'Edge of chaos' opens pathway to artificial intelligence discoveries

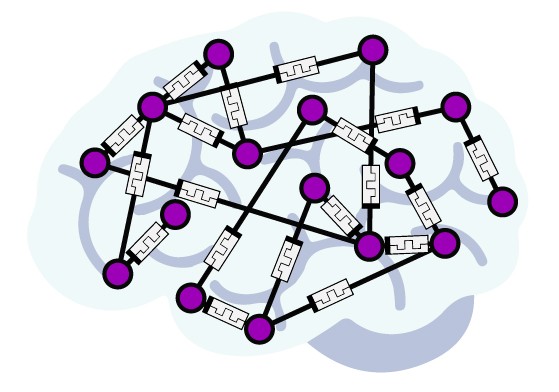

Conceptual image of a neural network (left) next to an optical micrograph image of a nanowire network. Micrograph credit: Adrian Diaz-Alvarez/NIMS Japan

Scientists at the University of Sydney and Japan’s National Institute for Materials Science (NIMS) have discovered that an artificial network of nanowires can be tuned to respond in a brain-like way when electrically stimulated.

The international team, led by Joel Hochstetter with Professor Zdenka Kuncic and Professor Tomonobu Nakayama, found that by keeping the network of nanowires in a brain-like state “at the edge of chaos”, it performed tasks at an optimal level.

This, they say, suggests the underlying nature of neural intelligence is physical, and their discovery opens an exciting avenue for the development of artificial intelligence.

The study is published today in Nature Communications.

“We used wires 10 micrometres long and no thicker than 500 nanometres arranged randomly on a two-dimensional plane,” said lead author Joel Hochstetter, a doctoral candidate in the University of Sydney Nano Institute and School of Physics.

“Where the wires overlap, they form an electrochemical junction, like the synapses between neurons,” he said. “We found that electrical signals put through this network automatically find the best route for transmitting information. And this architecture allows the network to ‘remember’ previous pathways through the system.”
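The geometry described here can be sketched in a few lines of Python. This is only an illustrative toy, not the team's simulation: the function names, the box size and the wire count are assumptions chosen for the example, and only the 10 micrometre wire length comes from the article. It scatters straight wires at random on a plane and lists every pair that crosses, each crossing standing in for one junction.

```python
import math
import random

def random_wires(n, length=10.0, box=50.0, seed=0):
    """Place n straight wires of equal length at random positions and angles.

    Units are micrometres; box (the side of the square region) is an
    assumed value for illustration only.
    """
    rng = random.Random(seed)
    wires = []
    for _ in range(n):
        cx, cy = rng.uniform(0, box), rng.uniform(0, box)
        theta = rng.uniform(0, math.pi)
        dx = 0.5 * length * math.cos(theta)
        dy = 0.5 * length * math.sin(theta)
        wires.append(((cx - dx, cy - dy), (cx + dx, cy + dy)))
    return wires

def _orient(p, q, r):
    """Signed area test: positive if r lies to the left of the line p -> q."""
    return (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])

def crosses(a, b):
    """True if segments a and b properly cross each other."""
    p1, p2 = a
    p3, p4 = b
    d1, d2 = _orient(p3, p4, p1), _orient(p3, p4, p2)
    d3, d4 = _orient(p1, p2, p3), _orient(p1, p2, p4)
    return d1 * d2 < 0 and d3 * d4 < 0

def find_junctions(wires):
    """Return index pairs (i, j), i < j, of wires that overlap."""
    return [
        (i, j)
        for i in range(len(wires))
        for j in range(i + 1, len(wires))
        if crosses(wires[i], wires[j])
    ]

wires = random_wires(200)
junctions = find_junctions(wires)
print(f"{len(wires)} wires, {len(junctions)} junctions")
```

The pairwise check is quadratic in the number of wires, which is fine for a few hundred wires but would need a spatial index for much larger networks.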

On the edge of chaos

Using simulations, the research team tested the random nanowire network to determine how it could best be made to solve simple tasks.

If the signal stimulating the network was too low, then the pathways were too predictable and orderly and did not produce complex enough outputs to be useful. If the electrical signal overwhelmed the network, the output was completely chaotic and useless for problem solving.

The optimal signal for producing a useful output was at the edge of this chaotic state.

Professor Zdenka Kuncic.

“Some theories in neuroscience suggest the human mind could operate at this edge of chaos, or what is called the critical state,” said Professor Kuncic from the University of Sydney. “Some neuroscientists think it is in this state where we achieve maximal brain performance.”

Professor Kuncic is Mr Hochstetter’s PhD adviser and is currently a Fulbright Scholar at the University of California, Los Angeles (UCLA), working at the intersection of nanoscience and artificial intelligence.

She said: “What’s so exciting about this result is that it suggests that these types of nanowire networks can be tuned into regimes with diverse, brain-like collective dynamics, which can be leveraged to optimise information processing.”

Overcoming computer duality

Conceptual image of randomly connected switches. Credit: Alon Loeffler

In the nanowire network, the junctions between the wires allow memory and operations to be combined in a single system. This is unlike standard computers, which separate memory (RAM) and operations (CPUs).

“These junctions act like computer transistors but with the additional property of remembering that signals have travelled that pathway before. As such, they are called ‘memristors’,” Mr Hochstetter said.

This memory takes a physical form: the junctions at the crossing points between nanowires act like switches whose behaviour depends on their past response to electrical signals. When signals are applied across these junctions, tiny silver filaments grow, activating the junctions by allowing current to flow through.
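A history-dependent switch of this kind can be illustrated with a deliberately simple toy model. The sketch below is not the junction model used in the study: the class name `ToyJunction`, the thresholds, the rate and the conductance values are all hypothetical, chosen only to show how a single state variable (standing in for filament growth) makes the junction's conductance depend on the signals it has seen.

```python
from dataclasses import dataclass

@dataclass
class ToyJunction:
    """Hypothetical memristive junction with one internal state variable."""
    w: float = 0.0        # filament state: 0 = no filament, 1 = fully formed
    v_set: float = 0.5    # assumed voltage above which the filament grows
    v_reset: float = 0.1  # assumed voltage below which the filament decays
    rate: float = 1.0     # assumed growth/decay rate
    g_off: float = 1e-3   # assumed conductance with no filament
    g_on: float = 1.0     # assumed conductance with a full filament

    def conductance(self):
        """Conductance interpolates between off and on with filament state."""
        return self.g_off + self.w * (self.g_on - self.g_off)

    def step(self, voltage, dt=0.01):
        """Advance the filament state by one time step and return the current."""
        v = abs(voltage)
        if v > self.v_set:
            self.w += self.rate * (v - self.v_set) * dt
        elif v < self.v_reset:
            self.w -= self.rate * (self.v_reset - v) * dt
        self.w = min(1.0, max(0.0, self.w))
        return self.conductance() * voltage

strong, weak = ToyJunction(), ToyJunction()
for _ in range(100):
    strong.step(1.0)  # above the set threshold: filament grows
    weak.step(0.3)    # between thresholds: state is unchanged
print(strong.conductance(), weak.conductance())
```

Two junctions that start out identical end up with different conductances purely because of the voltages applied to them earlier, which is the sense in which the junction "remembers".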

“This creates a memory network within the random system of nanowires,” he said.

Mr Hochstetter and his team built a simulation of the physical network to show how it could be trained to solve very simple tasks.

Lead author Joel Hochstetter.

“For this study we trained the network to transform a simple waveform into more complex types of waveforms,” Mr Hochstetter said.

In the simulation they adjusted the amplitude and frequency of the electrical signal to see where the best performance occurred.

“We found that if you push the signal too slowly the network just does the same thing over and over without learning and developing. If we pushed it too hard and fast, the network becomes erratic and unpredictable,” he said.

The University of Sydney researchers are working closely with collaborators at the International Center for Materials Nanoarchitectonics at NIMS in Japan and at UCLA, where Professor Kuncic is a visiting Fulbright Scholar. The nanowire systems were developed at NIMS and UCLA, and Mr Hochstetter developed the analysis, working with co-authors and fellow doctoral students Ruomin Zhu and Alon Loeffler.

Reducing energy consumption

Professor Kuncic said that uniting memory and operations has huge practical advantages for the future development of artificial intelligence.

“Algorithms needed to train the network to know which junction should be accorded the appropriate ‘load’ or weight of information chew up a lot of power,” she said.

“The systems we are developing do away with the need for such algorithms. We just allow the network to develop its own weighting, meaning we only need to worry about signal in and signal out, a framework known as ‘reservoir computing’. The network weights are self-adaptive, potentially freeing up large amounts of energy.”
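The "signal in, signal out" idea behind reservoir computing can be sketched with a conventional software reservoir. The example below is an assumption-laden stand-in, not the nanowire simulation: it uses a small random recurrent network with fixed weights (an echo-state-style reservoir), and all function names, sizes and constants are hypothetical. Only a linear readout is fitted, here to turn a sine wave into a square wave, echoing the waveform task described earlier in the article.

```python
import math
import random

def run_reservoir(inputs, n=20, seed=0, scale=0.9):
    """Drive a fixed random recurrent network and record its states."""
    rng = random.Random(seed)
    # Internal weights are random and never trained.
    w_in = [rng.uniform(-1, 1) for _ in range(n)]
    w = [[rng.uniform(-1, 1) * scale / math.sqrt(n) for _ in range(n)]
         for _ in range(n)]
    x = [0.0] * n
    states = []
    for u in inputs:
        x = [math.tanh(w_in[i] * u + sum(w[i][j] * x[j] for j in range(n)))
             for i in range(n)]
        states.append(x + [1.0])  # trailing constant acts as a bias feature
    return states

def solve(a, b):
    """Solve the linear system a z = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (m[r][n] - sum(m[r][c] * z[c] for c in range(r + 1, n))) / m[r][r]
    return z

def train_readout(states, targets, ridge=1e-4):
    """Ridge regression on the recorded states: the only trained part."""
    k = len(states[0])
    a = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
          for j in range(k)] for i in range(k)]
    b = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(k)]
    return solve(a, b)

def predict(states, weights):
    """Linear readout: weighted sum of each recorded state."""
    return [sum(wi * si for wi, si in zip(weights, s)) for s in states]

ts = [0.05 * k for k in range(400)]
sine = [math.sin(t) for t in ts]                     # signal in
square = [1.0 if s >= 0 else -1.0 for s in sine]     # desired signal out
states = run_reservoir(sine)
weights = train_readout(states, square)
outputs = predict(states, weights)
mse = sum((o - y) ** 2 for o, y in zip(outputs, square)) / len(square)
print(f"training mean squared error: {mse:.3f}")
```

The design point is that nothing inside `run_reservoir` is ever adjusted; training reduces to a single linear fit on the recorded states. In the nanowire setting the physical network would play the role of the reservoir, with its junctions adapting on their own.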

This, she said, means any future artificial intelligence systems using such networks would have much lower energy footprints.

Declaration

The authors acknowledge use of the Artemis High Performance Computing resource at the Sydney Informatics Hub, a Core Research Facility of the University of Sydney.